This is part of a series where I’m working towards building a game from scratch. You can find the repo for everything here, and the last part of the series where I did WebSockets is here.

To backtrack a little, in case you missed some or all of what came before: I’m trying to build everything using only the Rust standard library. Up until this point that wasn’t too difficult, but then I decided that I really wanted my WebSocket server to be async, and that’s where everything went sideways.

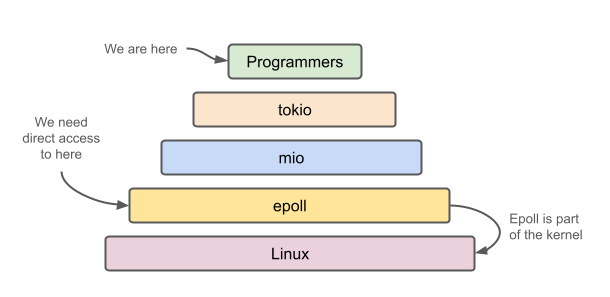

Not the movie sort of sideways, which leaves a bad taste in my mouth when I think about it. This sideways was realizing that if I wanted to do async using only the standard library, I was going to need to build it on top of epoll or io_uring. These are Linux APIs that let a program handle input and output operations, such as those on files and network sockets. The mio library is built on top of epoll, and the most popular async library, tokio, is built on mio. Mio itself is built on libc, using syscalls to talk to epoll, which is part of the Linux kernel. You can see this in their use of a syscall! macro (for Linux), found here.

What this means for us is that if we want async, we’ll need to use epoll and build a minimal mio, on top of which we’ll build a minimal tokio-style async runtime. Or, we can choose the other API, io_uring, and build on top of that. To me that sounds like more fun, not least because io_uring is shiny and new.

To be fair, the decision to use io_uring took a bit of playing around with both it and epoll, and while the latter certainly had more code examples and more extensive documentation, the former is likely where the future is headed. But before we jump in, let’s take a short look at these two.

On Linux, the tried-and-true way of creating an async runtime is using epoll. While tokio has tokio-uring built on top of io_uring, the tokio library most people use is built on epoll. But let’s rewind a moment and get our bearings.

You may or may not have heard of epoll, kqueue, IOCP or io_uring, but they’re at the heart of how operating systems handle concurrent I/O operations, such as reading and writing files and TCP connections. If you’re dealing with CDNs, file servers, database systems and such, then when a file or data is accessed it’s likely going to make use of one of these queues. The same thing happens when you open a connection to a website and request a page. That being said, it doesn’t always have to use these queues, but it probably will in situations with highly concurrent reading and writing of data.

On Linux, everything is associated with a file descriptor, from network connections to actual files, and since we’re focusing on Linux, we’re going to start with epoll and this definition:

The (Linux-specific) epoll API allows an application to monitor multiple file descriptors in order to determine which of the descriptors are ready to perform I/O. The API was designed as a more efficient replacement for the traditional select() and poll() system calls.

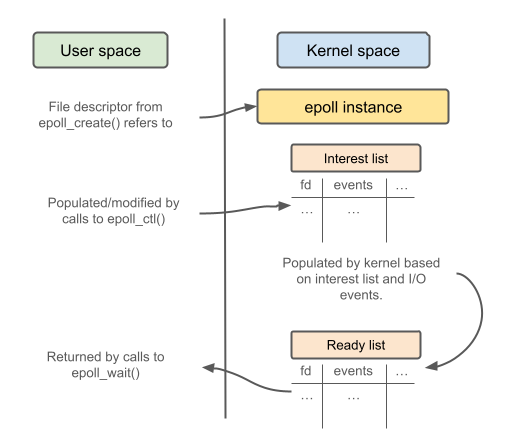

How does it do that? The brief introduction in this article gives a good idea. They also have a handy diagram, which I’ve redone because I need aesthetic consistency:

To break this down, what happens is:

So, epoll, as part of the OS, is software, and like all software, if something is successful, people will invariably want to create their own, new and improved version. Enter io_uring, an API that does similar things to epoll and, despite the implied snarkiness of my previous sentence, is actually an improvement in many ways.

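There are a number of differences, but two stand out. First, io_uring is completion-based rather than readiness-based: instead of telling you that a file descriptor is ready so you can go and perform the I/O yourself, you hand io_uring the operation and it tells you when it’s done, meaning it doesn’t block on step (4) from above. Second, it allows batching of operations. This batching can reduce the number of syscalls, aka calls into the OS, and if you didn’t know, syscalls are costly, and I’m not made of money.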

There are, of course, many other differences, from how the queues of io_uring differ from the lists of epoll to the breadth and depth of what each API can do, but that’s something we’ll unwind as we go through the process of building the async runtime.

Before we leave epoll behind for shiny new things, I’d recommend checking out this post which has a nice explanation and working example of epoll. Understanding how it works, and why it works in the way it does, will help you gain a better appreciation of io_uring.
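If you’d like a taste of epoll before moving on, here’s a minimal sketch of my own (not code from mio or the post above) that uses only the standard library. It declares epoll_create1, epoll_ctl, epoll_wait and close by hand, adds one end of a UnixStream pair to epoll’s interest list, writes a byte to the other end, and waits for epoll to report it as ready. It assumes Linux with glibc; the EPOLLIN and EPOLL_CTL_ADD values and the packed layout of epoll_event on x86_64 are copied from the C headers, and the token value 42 is arbitrary.

```rust
use std::io::{self, Write};
use std::os::raw::c_int;
use std::os::unix::io::AsRawFd;
use std::os::unix::net::UnixStream;

// Mirrors the kernel's struct epoll_event, which glibc packs on x86_64.
// The `data` union is treated as a plain u64 "token".
#[cfg_attr(target_arch = "x86_64", repr(C, packed))]
#[cfg_attr(not(target_arch = "x86_64"), repr(C))]
#[derive(Clone, Copy)]
struct EpollEvent {
    events: u32,
    data: u64,
}

const EPOLLIN: u32 = 0x001;
const EPOLL_CTL_ADD: c_int = 1;

extern "C" {
    fn epoll_create1(flags: c_int) -> c_int;
    fn epoll_ctl(epfd: c_int, op: c_int, fd: c_int, event: *mut EpollEvent) -> c_int;
    fn epoll_wait(epfd: c_int, events: *mut EpollEvent, maxevents: c_int, timeout: c_int) -> c_int;
    fn close(fd: c_int) -> c_int;
}

/// Returns (number of ready fds, token of the first ready fd).
fn epoll_round_trip() -> io::Result<(i32, u64)> {
    let (reader, mut writer) = UnixStream::pair()?;
    let epfd = unsafe { epoll_create1(0) };
    if epfd < 0 {
        return Err(io::Error::last_os_error());
    }
    // Add the reading end to the interest list, tagged with token 42.
    let mut interest = EpollEvent { events: EPOLLIN, data: 42 };
    if unsafe { epoll_ctl(epfd, EPOLL_CTL_ADD, reader.as_raw_fd(), &mut interest) } < 0 {
        let err = io::Error::last_os_error();
        unsafe { close(epfd) };
        return Err(err);
    }
    // Make the reading end readable.
    writer.write_all(b"x")?;
    // Block for up to one second until the kernel reports ready fds.
    let mut ready = [EpollEvent { events: 0, data: 0 }; 8];
    let n = unsafe { epoll_wait(epfd, ready.as_mut_ptr(), ready.len() as c_int, 1000) };
    let result = if n < 0 {
        Err(io::Error::last_os_error())
    } else {
        let token = ready[0].data; // copy the field out of the packed struct
        Ok((n, token))
    };
    unsafe { close(epfd) };
    result
}
```

Notice that epoll only tells us the descriptor is readable; actually reading the byte would be a separate read call, which is the extra step io_uring folds into a single operation.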

But wait, you may say, don’t leave epoll if you’re building a WebSocket server, since it may still have better performance than io_uring. And yes, you may be right, but reading the comments it seems these are issues which will be addressed. Also, io_uring is a lot younger than epoll and I can only see it getting better as development moves forward. Plus, I get to learn something new and cool that I can show off at dinner parties, and that’s the most important thing.

Here’s what we’ll end up doing in this article:

Now, if you’re familiar with bindgen, you may think I’m going to add a crate to my project, which means I’m not just using the standard library as I advertised earlier. Normally that would be true, and I may still end up doing that if my ad-hoc workarounds don’t pan out in the long run, but for now I’m only going to install the bindgen-cli tool and call out to it. Cheating? Maybe, but our Cargo.toml will remain as bare as Samuel L. Jackson’s dome (my apologies to Samuel L. Jackson).

There are a few prerequisites you’ll need to satisfy if you want to follow along on your own:

You can check your kernel version by using:

```bash
uname -r
```

Alternatively, use a Linux container, make sure it has web access, then ssh in and go crazy. With that out of the way, let’s get started and install liburing, the library that will help us with io_uring. The first thing we’ll do is check whether it’s already installed:

```bash
ldconfig -p | grep liburing
```

If you get something back, you can try using those existing libraries, or carry on to the next section where we install the latest version from source. I found this to be the most reliable, as the default apt repository has an older version. You can also refer to the original source if need be.
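If you’d rather script these checks, say from a build script that should fail early on an unsupported machine, here’s a minimal sketch. It reads /proc/sys/kernel/osrelease (the same string uname -r prints) and runs ldconfig -p from Rust. The helper names are hypothetical, and the 5.1 cutoff is simply the kernel release where io_uring first appeared; newer io_uring features need newer kernels, so treat it as a floor rather than a guarantee.

```rust
use std::fs;
use std::process::Command;

/// Parses release strings like "6.8.0-45-generic" into (major, minor).
fn parse_kernel_version(release: &str) -> Option<(u32, u32)> {
    let mut parts = release.trim().split(|c: char| c == '.' || c == '-');
    let major = parts.next()?.parse().ok()?;
    let minor = parts.next()?.parse().ok()?;
    Some((major, minor))
}

/// True if any line of `ldconfig -p` output mentions liburing.
fn mentions_liburing(ldconfig_output: &str) -> bool {
    ldconfig_output.lines().any(|line| line.contains("liburing"))
}

/// Runs both checks against the current machine.
fn check_prerequisites() -> Result<(), String> {
    let release = fs::read_to_string("/proc/sys/kernel/osrelease")
        .map_err(|e| format!("could not read kernel release: {}", e))?;
    let version = parse_kernel_version(&release)
        .ok_or_else(|| format!("unrecognized kernel release: {}", release.trim()))?;
    if version < (5, 1) {
        return Err(format!("kernel {}.{} predates io_uring", version.0, version.1));
    }
    let out = Command::new("ldconfig")
        .arg("-p")
        .output()
        .map_err(|e| format!("could not run ldconfig: {}", e))?;
    if !mentions_liburing(&String::from_utf8_lossy(&out.stdout)) {
        return Err("liburing not found in the shared library cache".to_string());
    }
    Ok(())
}
```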

If you already have the library installed but want the latest version, you can remove the existing one with the following:

```bash
sudo apt remove liburing-dev liburing2
sudo apt autoremove
ldconfig -p | grep liburing
```

The last line here just verifies it’s gone. If you’re still getting something back when it’s run, you may need to go to the folders /usr/lib and /usr/include and manually remove all liburing-associated .so and header files. It’s also possible that your files are in another folder if you’re using a different flavor of Linux, so make sure to look at the ldconfig output.

Next, make sure you have the build tools needed to compile from source:

```bash
sudo apt install build-essential
```

When that’s done, you can clone the repo, configure and make. There are directions in the repo which are more specific, but I got away with the following and it worked fine:

```bash
git clone https://github.com/axboe/liburing
cd liburing
./configure
make
sudo make install
sudo ldconfig
```

The last line with ldconfig is what updates your shared library (.so, shared object) cache so that it recognizes the presence of liburing. After it’s run, you can check that it’s all there by either going to /usr/lib and /usr/include and seeing for yourself, or running the following:

```bash
ls /usr/lib | grep "liburing"
ls /usr/include | grep "liburing"
```

You should see multiple files in each folder. If not, you’ll need to check whether they ended up in another folder or there was an error along the way.

We’ll be using bindgen, which is a command-line tool that:

automatically generates FFI bindings to C and C++ libraries.

If you’re not familiar with FFI (foreign function interface), it’s a way to call libraries written in other languages. Lots of stuff has been written in C/C++ and extensively tested, so rather than reinventing the wheel we build on top of it. Languages like Python make heavy use of C libraries through packages like NumPy and pandas. This is primarily done for performance reasons, and Python calls out to them for a lot of the heavy lifting when it comes to CPU-intensive tasks.
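To make FFI a bit more concrete before bindgen enters the picture, here’s a minimal sketch of doing by hand what bindgen automates: declaring a C function’s signature in an extern "C" block and calling it from Rust. It uses strlen from the C standard library purely as a stand-in, since glibc is always linked on Linux; c_strlen is a hypothetical wrapper name.

```rust
use std::ffi::CString;
use std::os::raw::c_char;

// Hand-written binding to C's strlen (size_t maps to usize).
extern "C" {
    fn strlen(s: *const c_char) -> usize;
}

/// Safe wrapper: CString guarantees a trailing NUL and no interior NULs,
/// which is exactly the contract strlen relies on.
fn c_strlen(text: &str) -> Option<usize> {
    let c_text = CString::new(text).ok()?; // fails if `text` contains '\0'
    Some(unsafe { strlen(c_text.as_ptr()) })
}
```

bindgen’s job is to generate thousands of declarations like the one in the extern block, plus matching struct layouts; writing safe wrappers around them is still up to us.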

Essentially, what we want to end up with are some shells of Rust functions which wrap the underlying library functions. They will look something like this when all is said and done:

```rust
extern "C" {
    pub fn sendmsg(
        __fd: ::std::os::raw::c_int,
        __message: *const msghdr,
        __flags: ::std::os::raw::c_int,
    ) -> isize;
}
```

Let’s install bindgen and get started then:

```bash
cargo install bindgen-cli
```

Once we have this, we’re going to generate our bindings in two ways: first by running bindgen by hand from the command line, and then automatically from a build.rs script.

The latter is the correct way of doing things, but we’ll do the first method just so we can see how it all works.

The first thing you’ll need to do is create a file called wrapper.h in the root of your project. The name doesn’t matter, so you can call it headers.h or whatever you want. Your project folder will look something like this:

```text
├── Cargo.lock
├── Cargo.toml
├── wrapper.h
├── src
│   └── main.rs
└── target
```

In wrapper.h we’re going to list the liburing C header file we want to bind:

```c
#include "/usr/include/liburing.h"
```

There are other header files, but bindgen should recursively pick up all the files that liburing.h itself includes, such as io_uring.h and barrier.h. Once you have added wrapper.h, you can run bindgen from the project folder:

```bash
bindgen wrapper.h --output bindings.rs
```

After running, you can open up bindings.rs, which should be alongside wrapper.h, and see approximately 10k lines of code. Unfortunately, it won’t have captured everything in liburing. You can check this by opening the original liburing.h and finding this function:

```c
IOURINGINLINE void io_uring_cq_advance(struct io_uring *ring, unsigned nr)
```

You will not find this in the generated file, because it’s an inline function, as denoted by IOURINGINLINE. We need to explicitly tell bindgen to include inline functions. You may be wondering why it doesn’t bind them by default. According to the docs:

These functions don’t typically end up in object files or shared libraries with symbols that we can reliably link to, since they are instead inlined into each of their call sites. Therefore, we don’t generate bindings to them, since that creates linking errors.

In our case we shouldn’t have that problem, or at least I haven’t thus far, so we’re going to force bindgen to include them by instead running the following:

```bash
bindgen wrapper.h --experimental --wrap-static-fns --output bindings.rs
```

Now you can search through bindings.rs and you should find the io_uring_cq_advance function included. To use these bindings, move the bindings.rs file into your src/ folder, and then in main.rs add:

```rust
pub mod bindings;

use bindings::*;

fn main() {
    // Nothing here yet
}
```

After that you’ll get a gazillion warnings about naming, since the C names don’t follow Rust’s conventions. To suppress them, slap this at the top of the bindings.rs file:

```rust
#![allow(non_upper_case_globals)]
#![allow(non_camel_case_types)]
#![allow(non_snake_case)]
```

While your editor won’t show any errors when you call these functions, you will get errors when you try to build, because you haven’t yet linked the actual library. I’d suggest trying to build anyway, just so you can see what the errors look like.

To fix this problem, create a build.rs file in your project folder alongside the wrapper.h and add the following to it:

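```rust
fn main() {
    println!("cargo:rustc-link-search=native=/usr/lib");
    println!("cargo:rustc-link-lib=dylib=uring");
}
```

The build.rs script, which you can read more about here, is a specially named script that Cargo compiles and runs before building your package, and linking to external libraries such as liburing is one of its common jobs. The println! calls are how the script talks to Cargo: Cargo reads the script’s standard output and treats lines beginning with cargo: as instructions. I couldn’t find a satisfactory explanation of why printing was chosen as the mechanism, but there do seem to be discussions and RFCs centered around the build process and the choice of using prints.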

These print statements add /usr/lib to the search path, and then link to liburing. It’s weird that what the print statement ACTUALLY says is to link to uring, when the library is called liburing. The convention on Linux is to prefix library file names with lib, and the linker adds that prefix (plus the .so extension) itself, so uring gets resolved to liburing.so. Weird, but that’s the convention.

The dylib portion of the print statement means we’re dynamically linking as opposed to statically linking, which means that if we were to distribute this as is, the other person would need to have this library installed. At this point, with everything in place, you should be able to run cargo build with only a few warnings about unused imports.
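To make the naming convention concrete, here’s a small, purely illustrative sketch of the lookup the linker performs for dylib=uring: it turns the link name into a lib<name>.so file name and searches the directories passed via rustc-link-search. The helper names are hypothetical and not part of Cargo, and the real linker also searches its default system paths.

```rust
use std::path::{Path, PathBuf};

/// The shared-library file name a Unix linker looks for when given
/// `-l<name>`: "uring" becomes "liburing.so".
fn dylib_file_name(link_name: &str) -> String {
    format!("lib{}.so", link_name)
}

/// Returns the first search directory containing lib<name>.so, roughly
/// what cargo:rustc-link-search plus cargo:rustc-link-lib=dylib=<name>
/// ask the linker to do.
fn find_dylib(search_dirs: &[&Path], link_name: &str) -> Option<PathBuf> {
    let file = dylib_file_name(link_name);
    search_dirs
        .iter()
        .map(|dir| dir.join(&file))
        .find(|candidate| candidate.exists())
}
```

For example, find_dylib(&[Path::new("/usr/lib")], "uring") should return /usr/lib/liburing.so after the source install above.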

Note: At this point you’ll be able to use functions and types which are not inline, but inline functions will likely still fail. We’ll fix that in the next section.

As mentioned, there is a better way of doing things, and that’s through expanding our build.rs script. The reasons for using the build script and not the manual method come down to:

There are other reasons, but let’s just say that for now, it’s a more sustainable method and get on with the show.

Let’s first modify our build.rs script to look like the following:

```rust
use std::env;
use std::path::PathBuf;
use std::process::Command;

fn main() {
    println!("cargo:rustc-link-search=native=/usr/lib");
    println!("cargo:rustc-link-lib=dylib=uring");
    println!("cargo:rerun-if-changed=wrapper.h");

    let out_path = PathBuf::from(env::var("OUT_DIR").unwrap());

    // Generate bindings using command-line bindgen
    let bindgen_output = Command::new("bindgen")
        .arg("--experimental")
        .arg("--wrap-static-fns")
        .arg("wrapper.h")
        .arg("--output")
        .arg(out_path.join("bindings.rs"))
        .output()
        .expect("Failed to generate bindings");

    if !bindgen_output.status.success() {
        panic!(
            "Could not generate bindings:\n{}",
            String::from_utf8_lossy(&bindgen_output.stderr)
        );
    }
}
```

While the first two lines are familiar, we’ve added quite a bit, but if you look closely you’ll find that, with the exception of out_path, the bindgen statement is doing the same thing we did at the command line earlier. The third println!, cargo:rerun-if-changed=wrapper.h, tells Cargo to rerun the build script when wrapper.h changes instead of whenever any file in the package changes. Let’s take a look at the other unfamiliar parts.

First, there’s out_path, which is built from the OUT_DIR environment variable that Cargo sets when it runs our build script. It points to a directory under target/ where build scripts are expected to put generated files, which is why our bindings.rs goes there.

Second, the Command statement runs bindgen as if we’d typed it at the command line, and the expect panics if the process can’t be started at all, for example when bindgen isn’t on your PATH.

Third, if bindgen runs but reports failure, we panic with an error message along with whatever bindgen wrote to stderr, which we pull out of the Output struct returned by Command.

Still, this doesn’t 100% work, as those inline functions are still going to give us difficulty. All we’ve really done here is take the command line version and move it into our build script.

This is where things get a bit more difficult, as if they haven’t been already. When we pass --wrap-static-fns, bindgen creates wrappers around the inline functions for us, but they’re written in C and output to an extern.c file. In fact, if you find this file yourself in /tmp/bindgen and delete it, you can then regenerate it using the command from the earlier section:

```bash
bindgen wrapper.h --experimental --wrap-static-fns --output bindings.rs
```

Since we can’t use extern.c directly in our program, we need to compile it into an object (.o) file that we can link against. The easy way of doing that is to use the cc crate and do the following:

```rust
cc::Build::new()
    .file(&extern_c_path)
    .include("/usr/include")
    .include(".") // To find wrapper.h in current directory
    .compile("extern");
```

But doing that would ruin our unscathed Cargo.toml, so instead we’ll do it ourselves using gcc in a somewhat more complex manner:

```rust
use std::env;
use std::path::PathBuf;
use std::process::Command;

fn main() {
    println!("cargo:rustc-link-search=native=/usr/lib");
    println!("cargo:rustc-link-lib=dylib=uring");
    println!("cargo:rerun-if-changed=wrapper.h");

    let out_path = PathBuf::from(env::var("OUT_DIR").unwrap());
    let extern_c_path = env::temp_dir().join("bindgen").join("extern.c");

    // … previous bindgen code goes here

    // Compile the generated wrappers
    let gcc_output = Command::new("gcc")
        .arg("-c")
        .arg("-fPIC")
        .arg("-I/usr/include")
        .arg("-I.")
        .arg(&extern_c_path)
        .arg("-o")
        .arg(out_path.join("extern.o"))
        .output()
        .expect("Failed to compile C code");

    if !gcc_output.status.success() {
        panic!(
            "Failed to compile C code:\n{}",
            String::from_utf8_lossy(&gcc_output.stderr)
        );
    }

    // Create a static library for the wrappers
    let ar_output = Command::new("ar")
        .arg("crus")
        .arg(out_path.join("libextern.a"))
        .arg(out_path.join("extern.o"))
        .output()
        .expect("Failed to create static library");

    if !ar_output.status.success() {
        panic!(
            "Failed to create static library:\n{}",
            String::from_utf8_lossy(&ar_output.stderr)
        );
    }

    // Tell cargo where to find the new library
    println!("cargo:rustc-link-search=native={}", out_path.display());
    println!("cargo:rustc-link-lib=static=extern");
}
```

Here’s what we’re doing:

I believe step (1) is easy enough to understand, and we’ve already done (2) above, so we’ll start with (4) and the options passed to the compiler:

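The rest of the code deals with our output path, which should be easy enough to follow. As for -fPIC, it tells gcc to generate position-independent code, which is what’s required for code that ends up in shared libraries. As far as I can tell it matters here too because Rust produces position-independent executables by default on Linux, so object files linked into them need to be position-independent as well.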

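In the second part, where we create a static library, we use ar, which is an archive tool used for libraries. We pass it crus, which is actually four modifiers: c creates the archive without warning us that it didn’t exist yet, r inserts the file (replacing any existing member with the same name), u only replaces members that are older than the file being added, and s writes a symbol index into the archive so the linker can find functions in it.

There’s a bit more nuance to these commands that you can read about here, but to summarize, it’s packaging our object file (.o) into an archive (.a), a static library that we then statically link to. At this point you should be able to compile without errors.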
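The bindgen, gcc and ar steps all repeat the same run, check, panic pattern. If the build script keeps growing, one option is to pull that pattern into a small helper. Here’s a sketch, with a hypothetical run function and message format of my own, that turns both failure modes (the program couldn’t be started, or it exited unsuccessfully) into a single error that includes stderr.

```rust
use std::process::{Command, Output};

/// Runs `cmd`, returning its output on success and a descriptive error
/// (labelled with `what`) if it can't be started or exits unsuccessfully.
fn run(cmd: &mut Command, what: &str) -> Result<Output, String> {
    let output = cmd
        .output()
        .map_err(|e| format!("{}: failed to start: {}", what, e))?;
    if !output.status.success() {
        return Err(format!(
            "{}: exited with {}:\n{}",
            what,
            output.status,
            String::from_utf8_lossy(&output.stderr)
        ));
    }
    Ok(output)
}
```

In build.rs, the archive step could then become run(Command::new("ar").arg("crus").arg(out_path.join("libextern.a")).arg(out_path.join("extern.o")), "ar").unwrap_or_else(|msg| panic!("{}", msg)); and likewise for bindgen and gcc.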

Now that we’ve created bindings, we’re going to do a quick and dirty demo, and in the next article we’ll build out something more full-featured. To start, you’ll want to add this to your main.rs:

```rust
#![allow(non_upper_case_globals)]
#![allow(non_camel_case_types)]
#![allow(non_snake_case)]

#[cfg(not(rust_analyzer))]
include!(concat!(env!("OUT_DIR"), "/bindings.rs"));

use std::io;
use std::mem::zeroed;
use std::ptr::null_mut;
```

The initial attributes silence naming warnings we don’t care about. The next two lines after that are a bit odd. The include! pastes the contents of the generated bindings.rs directly into this file, so you can think of it a bit like a pub mod statement, except that it reaches into the build folder for the file instead of src. In this case it uses the OUT_DIR environment variable mentioned earlier, read at compile time by env!, to find the bindings.rs file. The first of these two lines, the cfg attribute above the include!, is just a way to shut up the errors you may get, depending on your editor. Without it the code still compiles just fine, but you may see an error in your editor.

If you have no errors, then we have successfully created bindings to io_uring and are ready to create a basic example, but before we do that we should take a look at what io_uring actually is, and why we need it.

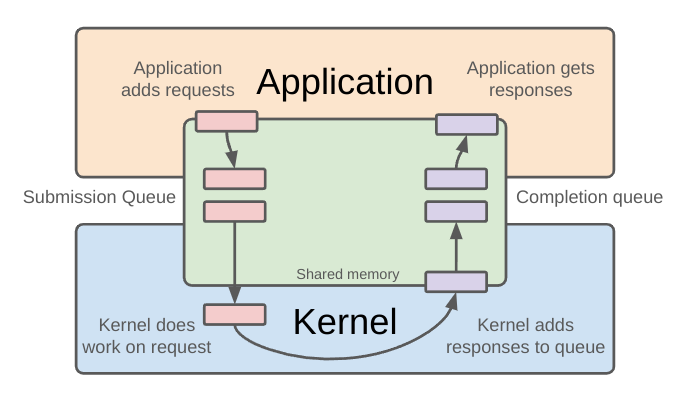

At this point you may have realized that we’ve been so busy installing libraries and such that we never got past the basics of io_uring to understand how the API works. I’d recommend reading this and this to get a deeper understanding. For now, I’ll borrow this diagram from the second link to give a quick description:

With io_uring you have both a submission and a completion queue, which are similar to the interest and ready lists from epoll, with one major difference. With epoll, the lists are maintained by the kernel, in kernel space, whereas with io_uring the queues live in memory shared between your program and the kernel. The benefit of this model is that you don’t need to make as many syscalls, especially when updating the status of items on the queue and such. This means there is less context switching overall.

The process for io_uring is mostly the same, at least conceptually. You add what are referred to as submission queue entries, or SQEs, to the submission queue and get the completed work off the completion queue, just like adding and retrieving items in epoll. Normally these entries are going to involve waiting on I/O, whether a file read/write or network traffic, though you can also use it for other things, which I won’t get into here for the sake of keeping an already long article from getting too long.
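Both queues are ring buffers, and I found it helpful to play with a toy version. Here’s a minimal single-threaded sketch, my own simplification rather than liburing’s code: the producer advances a tail counter, the consumer advances a head counter, both counters only ever grow, and index & mask picks the slot, which is why ring sizes are powers of two. The real rings live in memory shared with the kernel and rely on atomic loads and stores with acquire/release ordering, all of which is left out here.

```rust
/// Toy ring buffer modeled loosely on io_uring's queues (single-threaded).
struct ToyRing<T> {
    slots: Vec<Option<T>>,
    mask: u32,
    head: u32, // next entry the consumer will read
    tail: u32, // next free slot the producer will fill
}

impl<T> ToyRing<T> {
    fn new(entries: u32) -> Self {
        let size = entries.next_power_of_two(); // keeps `& mask` valid
        ToyRing {
            slots: (0..size).map(|_| None).collect(),
            mask: size - 1,
            head: 0,
            tail: 0,
        }
    }

    /// Number of entries produced but not yet consumed.
    fn len(&self) -> u32 {
        self.tail.wrapping_sub(self.head)
    }

    /// Producer side: hands the item back if the ring is full, much like
    /// io_uring_get_sqe returning NULL.
    fn push(&mut self, item: T) -> Result<(), T> {
        if self.len() == self.mask + 1 {
            return Err(item);
        }
        self.slots[(self.tail & self.mask) as usize] = Some(item);
        self.tail = self.tail.wrapping_add(1);
        Ok(())
    }

    /// Consumer side: non-blocking, like peeking for a completion.
    /// Advancing head is the toy equivalent of marking an entry as seen.
    fn pop(&mut self) -> Option<T> {
        if self.len() == 0 {
            return None;
        }
        let item = self.slots[(self.head & self.mask) as usize].take();
        self.head = self.head.wrapping_add(1);
        item
    }
}
```

In io_uring terms, your program is the producer for the submission ring and the kernel is the consumer, while the roles flip for the completion ring.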

After adding and submitting an entry, you can choose whether to block and wait on completion queue entries (CQEs) with io_uring_wait_cqe, wait with a timeout, or poll using io_uring_peek_cqe. Whichever you choose, you’ll eventually get either a completion or a timeout, and then continue adding and getting items.

This is a hand wavy sort of description of what’s going on, and there is a lot more to it, as we’ll see in future articles, but for now the image above provides a good visualization to take with you.

For this final bit, we’re going to use our bindings to create a simple queue, throw something on it and get something back. We’ll be doing the following:

Let’s start by looking at the flow of our main function:

```rust
fn main() -> io::Result<()> {
    let queue_depth: u32 = 1;
    let mut ring = setup_io_uring(queue_depth)?;

    println!("Submitting NOP operation");
    submit_noop(&mut ring)?;

    println!("Waiting for completion");
    wait_for_completion(&mut ring)?;

    unsafe { io_uring_queue_exit(&mut ring) };
    Ok(())
}
```

In main.rs we first have this, which sets up the queue:

```rust
let queue_depth: u32 = 1;
let mut ring = setup_io_uring(queue_depth)?;
```

Looking at the function setup_io_uring, we have the following:

```rust
fn setup_io_uring(queue_depth: u32) -> io::Result<io_uring> {
    let mut ring: io_uring = unsafe { zeroed() };
    let ret = unsafe { io_uring_queue_init(queue_depth, &mut ring, 0) };
    if ret < 0 {
        // liburing returns -errno on failure instead of setting errno
        return Err(io::Error::from_raw_os_error(-ret));
    }
    Ok(ring)
}
```

We take in the depth of the queue, which in main we set to 1, and then return an io_uring type. This type comes from our bindings and looks like this:

```rust
pub struct io_uring {
    pub sq: io_uring_sq,
    pub cq: io_uring_cq,
    pub flags: ::std::os::raw::c_uint,
    pub ring_fd: ::std::os::raw::c_int,
    pub features: ::std::os::raw::c_uint,
    pub enter_ring_fd: ::std::os::raw::c_int,
    pub int_flags: __u8,
    pub pad: [__u8; 3usize],
    pub pad2: ::std::os::raw::c_uint,
}
```

This is populated by io_uring_queue_init, which calls the io_uring_setup system call under the hood; you can find both of these in the bindings. This sets the size of the submission queue (sq) to 1 and automatically sets the completion queue (cq) to twice that, so 2. As I understand it, the completion queue is made larger by default because more operations can be in flight than fit in the submission queue at once. Also note that we zeroed the io_uring struct before handing it over and passed 0 for the flags, so we get the default setup.
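The sizing rule can be written down as a tiny function. This is a sketch of the default behavior as I understand it from the io_uring_setup documentation: the requested count is rounded up to a power of two, zero is rejected, and the completion queue gets twice the submission queue’s entries. default_ring_sizes is a hypothetical name, and flags like IORING_SETUP_CQSIZE and the kernel’s upper limit on entries are ignored.

```rust
/// Default (sq_entries, cq_entries) for a requested queue depth, or None
/// where io_uring_setup would reject the request with -EINVAL.
fn default_ring_sizes(requested: u32) -> Option<(u32, u32)> {
    if requested == 0 {
        return None;
    }
    let sq_entries = requested.next_power_of_two();
    Some((sq_entries, sq_entries * 2))
}
```

With our queue_depth of 1 this gives (1, 2), matching the sizes described above.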

We’ll be using unsafe blocks throughout, because we’re calling out to the non-Rust world, where the compiler can’t verify any of Rust’s guarantees. We have to trust the authors of the libraries in this case.
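One detail worth calling out: liburing functions such as io_uring_queue_init, io_uring_submit and io_uring_wait_cqe report failure by returning a negative errno value rather than setting errno, so io::Error::last_os_error() isn’t the right way to read their errors. A small helper makes the conversion explicit; check_ret is a hypothetical name.

```rust
use std::io;

/// Converts a liburing-style return value (>= 0 on success, -errno on
/// failure) into an io::Result.
fn check_ret(ret: i32) -> io::Result<i32> {
    if ret < 0 {
        Err(io::Error::from_raw_os_error(-ret))
    } else {
        Ok(ret)
    }
}
```

With it, the check in setup_io_uring becomes check_ret(unsafe { io_uring_queue_init(queue_depth, &mut ring, 0) })?;.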

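Getting back to main, now that we have our ring variable, which is really composed of the two rings (submission and completion), we pass it to submit_noop, which does the following:

```rust
fn submit_noop(ring: &mut io_uring) -> io::Result<()> {
    unsafe {
        // Grab a free submission queue entry; NULL means the queue is full
        let sqe = io_uring_get_sqe(ring);
        if sqe.is_null() {
            return Err(io::Error::new(io::ErrorKind::Other, "Failed to get SQE"));
        }
        // Turn it into a no-op and tag it so we can recognize its completion
        io_uring_prep_nop(sqe);
        (*sqe).user_data = 0x88;
        // Hand the entry to the kernel; failures come back as -errno
        let ret = io_uring_submit(ring);
        if ret < 0 {
            return Err(io::Error::from_raw_os_error(-ret));
        }
    }
    Ok(())
}
```

What is happening here is we:

1. ask io_uring_get_sqe for a free submission queue entry, bailing out if we get a null pointer back because the queue is full;
2. prepare that entry as a no-op with io_uring_prep_nop;
3. set its user_data to 0x88 so we can recognize it when it completes;
4. submit it to the kernel with io_uring_submit.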

Aside from io_uring_prep_nop, there are a large number of other prep functions, such as reading and writing from files or TCP connections. I’d recommend a quick search to see what is available. We’ll be using some of these in later articles.

The last thing we’ll do is wait for our NOP to complete:

```rust
fn wait_for_completion(ring: &mut io_uring) -> io::Result<()> {
    let mut cqe: *mut io_uring_cqe = null_mut();
    let ret = unsafe { io_uring_wait_cqe(ring, &mut cqe) };
    if ret < 0 {
        // Like the other liburing calls, this returns -errno on failure
        return Err(io::Error::from_raw_os_error(-ret));
    }
    unsafe {
        println!("NOP completed with result: {}", (*cqe).res);
        println!("User data: 0x{:x}", (*cqe).user_data);
        // Mark the entry as consumed so its slot can be reused
        io_uring_cqe_seen(ring, cqe);
    }
    Ok(())
}
```

Here we create a null, mutable raw pointer to io_uring_cqe, which is a struct that looks like this:

```rust
pub struct io_uring_cqe {
    pub user_data: __u64,
    pub res: __s32,
    pub flags: __u32,
    pub big_cqe: __IncompleteArrayField<__u64>,
}
```

This is a completion queue entry. We pass a pointer to our pointer into io_uring_wait_cqe, which waits until a completion is available and then sets cqe to point at that entry in the completion queue.

For now, we’ll only concern ourselves with the res and user_data fields, which we print out after checking that the return value from io_uring_wait_cqe doesn’t indicate failure (i.e., cqe is actually pointing to something). For our NOP, res should be 0 and user_data should be the 0x88 we set when submitting.

The last thing which happens here is we call io_uring_cqe_seen to inform io_uring that we’ve seen this completion. If you look at the docs, this has to be done or the completion queue’s slot can’t be reused.
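In this demo user_data is just the constant 0x88, but once several operations are in flight, completions can come back in a different order than they were submitted, so you need a way to map each CQE back to the request that produced it. Here’s a minimal sketch of one approach, using a hypothetical InFlight type that hands out unique u64 tokens to store in sqe.user_data and looks them up again when the matching CQE arrives.

```rust
use std::collections::HashMap;

/// Hypothetical bookkeeping for operations that have been submitted but
/// whose completions haven't arrived yet.
struct InFlight<T> {
    next_token: u64,
    pending: HashMap<u64, T>,
}

impl<T> InFlight<T> {
    fn new() -> Self {
        InFlight { next_token: 1, pending: HashMap::new() }
    }

    /// Call when submitting; store the returned token in sqe.user_data.
    fn register(&mut self, op: T) -> u64 {
        let token = self.next_token;
        self.next_token += 1;
        self.pending.insert(token, op);
        token
    }

    /// Call with cqe.user_data for each completion; returns the matching
    /// operation, or None if the token is unknown or already completed.
    fn complete(&mut self, user_data: u64) -> Option<T> {
        self.pending.remove(&user_data)
    }
}
```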

Finally, we head back to main and then exit the queue:

```rust
unsafe { io_uring_queue_exit(&mut ring) };
```

This function will release the resources and unshackle you from the burdens of ring management.

So that’s it, just a few thousand words accompanied by a page or two of code is all you need to create your own bindings to liburing and an io_uring queue. And this, at least judging by what I’ve been working on for the next section, is the easy part.

We’re still a long ways off from an async server, but we’re getting closer!